15. Quiz: TensorFlow Dropout

TensorFlow Dropout

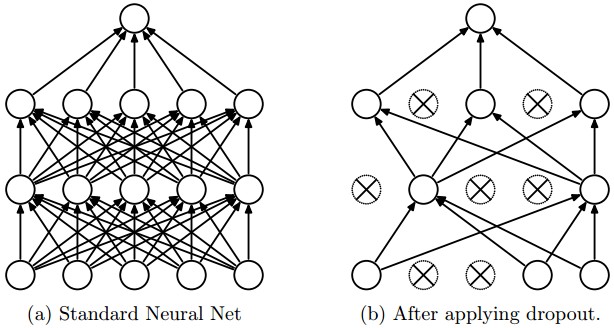

Figure 1: Taken from the paper "Dropout: A Simple Way to Prevent Neural Networks from

Overfitting" (https://www.cs.toronto.edu/~hinton/absps/JMLRdropout.pdf)

Dropout is a regularization technique for reducing overfitting. The technique temporarily drops units (artificial neurons) from the network, along with all of those units' incoming and outgoing connections. Figure 1 illustrates how dropout works.

TensorFlow provides the tf.nn.dropout() function, which you can use to implement dropout.

Let's look at an example of how to use tf.nn.dropout().

keep_prob = tf.placeholder(tf.float32) # probability to keep units

hidden_layer = tf.add(tf.matmul(features, weights[0]), biases[0])

hidden_layer = tf.nn.relu(hidden_layer)

hidden_layer = tf.nn.dropout(hidden_layer, keep_prob)

logits = tf.add(tf.matmul(hidden_layer, weights[1]), biases[1])The code above illustrates how to apply dropout to a neural network.

The tf.nn.dropout() function takes in two parameters:

hidden_layer: the tensor to which you would like to apply dropoutkeep_prob: the probability of keeping (i.e. not dropping) any given unit

keep_prob allows you to adjust the number of units to drop. In order to compensate for dropped units, tf.nn.dropout() multiplies all units that are kept (i.e. not dropped) by 1/keep_prob.

During training, a good starting value for keep_prob is 0.5.

During testing, use a keep_prob value of 1.0 to keep all units and maximize the power of the model.

Quiz 1

Take a look at the code snippet below. Do you see what's wrong?

There's nothing wrong with the syntax, however the test accuracy is extremely low.

...

keep_prob = tf.placeholder(tf.float32) # probability to keep units

hidden_layer = tf.add(tf.matmul(features, weights[0]), biases[0])

hidden_layer = tf.nn.relu(hidden_layer)

hidden_layer = tf.nn.dropout(hidden_layer, keep_prob)

logits = tf.add(tf.matmul(hidden_layer, weights[1]), biases[1])

...

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

for epoch_i in range(epochs):

for batch_i in range(batches):

....

sess.run(optimizer, feed_dict={

features: batch_features,

labels: batch_labels,

keep_prob: 0.5})

validation_accuracy = sess.run(accuracy, feed_dict={

features: test_features,

labels: test_labels,

keep_prob: 0.5})SOLUTION:

keep_prob should be set to 1.0 when evaluating validation accuracy.Quiz 2

This quiz will be starting with the code from the ReLU Quiz and applying a dropout layer. Build a model with a ReLU layer and dropout layer using the keep_prob placeholder to pass in a probability of 0.5. Print the logits from the model.

Note: Output will be different every time the code is run. This is caused by dropout randomizing the units it drops.

Start Quiz:

User's Answer:

(Note: The answer done by the user is not guaranteed to be correct)